Python Machine Learning

Python is easy. Machine Learning can get easy with Python. Relatively speaking, Machine Learning Models are easier to develop in Python.

This post is part of a series on the automated creation of Machine Learning Models and of the Datasets used to train Neural Networks. If you are interested in the background of the story, how the series began, or the Python code behind it, you may scroll to the bottom of the post for links to the previous blog posts. You may also head to the Use SERP Data to Build Machine Learning Models page to get a clear idea of what kind of automated Machine Learning Models you can create, or how to improve your Data Science project.

In previous weeks I made a tutorial on how to automate the creation of Deep Learning algorithms for training Machine Learning Models with an automated API. This week I will walk through the step-by-step creation of Neural Networks in an easy-to-understand way, with visualization.

Is Python Good for Machine Learning?

Python supports commonly used Machine Learning libraries such as Keras, PyTorch, TensorFlow (including TensorFlow 2), and scikit-learn, along with supporting Data Science libraries such as SciPy, NumPy, Matplotlib, and Pandas. From the creation of Neural Networks to the visualization of the Machine Learning process, Python is one of the fundamentals for learners of Machine Learning, with a wide variety of open source code, tutorials, and real-world applications.

On top of all this, Python is a great programming language for building an API, whether small or large in scale. The variety of Python libraries also creates a unique opportunity for different branches of computer science to meet. A data scientist doing data analysis, a programmer writing Python, and learners starting from scratch can meet in the same project and share a variety of metrics and information without much hassle.

In the scope of this tutorial, we are training an Image Classifier. For new readers: we have used SerpApi’s Google Images Scraper API to get images of American dog breeds with a width and height of 500 pixels, specified with a dog chips parameter that restricts the results to dog images. Using SERP data is a great way to reduce the time you spend on preprocessing and to make predictions with less noise. You may Register to Claim Free Credits.

Is Machine Learning in Python Hard?

Python is easy, and Machine Learning can get easy with Python: relatively speaking, Machine Learning Models are easier to develop in it. Python also supports rich libraries with Machine Learning Algorithms for Natural Language Processing, Data Visualization, SERP, and Preprocessing.

To make things even easier with Python, this week I have created a step-by-step form that helps you create Neural Networks for Machine Learning. In my opinion, Python has the potential to cure what is hard about Machine Learning. If we let people who are interested in Machine Learning create a Deep Learning algorithm in a way that suits them, is easy to grasp, and shows limited detail at each step, and if we give them room to fail and succeed and to run Cross Validations at scale, it will inevitably lead to a better grasp of Machine Learning. Of course, I will have to add different ways of entering the details of a training process. Personally, to train a classifier, I wouldn’t want to take five steps one by one to create a Linear Regression. I’d prefer a JSON I can manipulate, or a form where every hyper-parameter of a Linear Regression is in one place for me to fill in easily, just like SerpApi’s Playground.

I am just a learner myself, not an expert on Machine Learning. But that’s my preference. Without further ado, let’s dive into the form.

Machine Learning Model

I have created a way to either use a previously defined Machine Learning Algorithm by its Python class name, or build a custom algorithm by creating your Neural Network layer by layer. If you choose the class name, the layer creation won’t show up, so we will pick the custom method for now.

Building Blocks of a Neural Network

You may choose the type of each layer for your Neural Network, define its hyper-parameters, and decide whether you would like to add another layer. Only the layers we will use in this tutorial are available for now, but they should give a good idea of how creating Machine Learning Algorithms works.

In the future, I plan to support the layer types and hyper-parameters necessary to create popular Machine Learning Algorithms such as KNN (K-Nearest Neighbors), K-Means Clustering, SVM (Support Vector Machines), Hierarchical Clustering, Random Forest, Decision Trees, Logistic Regression, and various other Classification and Regression Algorithms.

You may also use predefined keywords such as auto to fill in the in-channels or in-features size of a layer, or n_labels to use the number of labels. Calculating some hyper-parameters manually can be a hindrance, especially when dealing with Dimensionality Reduction. Not all of the calculations are supported at this point, but it should give a clear idea of how to reduce complexity for the user when dealing with Machine Learning.
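To make the auto and n_labels keywords concrete, here is a minimal sketch of how such placeholders might be resolved before the layers are built. The dictionary keys (type, in_features, out_features) and the function name resolve_layers are assumptions made for illustration, not the actual schema behind the form.

```python
def resolve_layers(layers, n_labels, input_features):
    """Replace the 'auto' and 'n_labels' placeholders with concrete integers.

    Hypothetical schema: each layer is a dict with 'in_features' and
    'out_features' keys, as in a stack of linear layers.
    """
    resolved = []
    previous_out = input_features
    for layer in layers:
        layer = dict(layer)  # copy so the caller's spec is left untouched
        if layer["in_features"] == "auto":
            # 'auto' takes the output size of the previous layer
            layer["in_features"] = previous_out
        if layer["out_features"] == "n_labels":
            # 'n_labels' takes the number of classes in the dataset
            layer["out_features"] = n_labels
        previous_out = layer["out_features"]
        resolved.append(layer)
    return resolved


spec = [
    {"type": "Linear", "in_features": "auto", "out_features": 128},
    {"type": "Linear", "in_features": "auto", "out_features": "n_labels"},
]
layers = resolve_layers(spec, n_labels=3, input_features=1024)
print(layers)
```

The point of the design is that the user only has to state the sizes they care about; everything that can be derived from the previous layer or from the dataset is filled in for them.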

Criterion

So far I have only supported the CrossEntropyLoss function of Python’s PyTorch library, without any tweaks. I used the name Criterion as a broader term. In PyTorch code, criterion is the conventional name for the function that scores how far the model’s predictions are from the targets. Several related terms overlap with it. An Error Function measures the difference between the Machine Learning Model’s prediction and the real-world value. An Objective Function is the function you want to maximize or minimize in order to get a better prediction. A Cost Function, or Loss Function, is an Objective Function that is meant to be minimized, so a lower value means a smaller gap between the prediction of the Artificial Intelligence and the real-world example. For all of these functions, which let us evaluate the success of a training process, we will use the name Criterion.
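As a rough illustration of what the criterion measures, the sketch below computes cross-entropy for a single sample by hand: the negative log of the softmax probability assigned to the correct class. It uses only the standard library and is not the PyTorch implementation; the function name and the example scores are made up for this illustration.

```python
import math


def cross_entropy(logits, target_index):
    """Cross-entropy loss for one sample, given raw scores (logits)."""
    # Subtract the maximum for numerical stability; softmax is unchanged by it.
    top = max(logits)
    shifted = [x - top for x in logits]
    log_sum_exp = math.log(sum(math.exp(x) for x in shifted))
    # Negated log-softmax of the correct class.
    return -(shifted[target_index] - log_sum_exp)


confident_and_right = cross_entropy([4.0, 0.0, 0.0], target_index=0)
confident_and_wrong = cross_entropy([4.0, 0.0, 0.0], target_index=1)
undecided = cross_entropy([0.0, 0.0, 0.0], target_index=0)
print(confident_and_right, undecided, confident_and_wrong)
```

A confident correct prediction gives a loss close to zero, an undecided one gives log(3) for three classes, and a confident wrong prediction is penalized the most.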

Optimizer

The PyTorch library we are using for this Machine Learning process supports many types of optimizers with great customization options. The Optimizer is the function responsible for adjusting the weights of the Neural Network in order to find a good fit for prediction. Learning Rate is an essential hyper-parameter of an optimizer: it controls how large a step is taken at each weight update. When it is too high, training becomes unstable and the results will be odd and incorrect. Oftentimes with a high learning rate you may get less than 50 percent correct predictions in a binary selection. If the learning rate is too low, your training process may not get close to a minimum of the criterion within the epochs you give it. This will result in poorly trained Neural Networks. Momentum carries a fraction of the previous updates into the current one, so steps build up while successive gradients point the same way and are damped when they oscillate.
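The effect of learning rate and momentum can be seen on a toy problem. The sketch below minimizes f(w) = w² with plain gradient descent plus a momentum term, using the common update rule (velocity = momentum × velocity + gradient, then w = w − lr × velocity). The function name and the specific values are chosen for illustration only; real training behaves less tidily.

```python
def sgd_with_momentum(lr, momentum, steps=50, start=5.0):
    """Minimize f(w) = w**2 and return the final w."""
    w, velocity = start, 0.0
    for _ in range(steps):
        grad = 2.0 * w                           # derivative of w**2
        velocity = momentum * velocity + grad    # accumulate past gradients
        w = w - lr * velocity                    # step against that direction
    return w


too_low = sgd_with_momentum(lr=0.0001, momentum=0.0)    # barely moves from 5.0
reasonable = sgd_with_momentum(lr=0.1, momentum=0.0)    # ends close to 0
too_high = sgd_with_momentum(lr=1.1, momentum=0.0)      # overshoots and diverges
plain = sgd_with_momentum(lr=0.01, momentum=0.0)
boosted = sgd_with_momentum(lr=0.01, momentum=0.9)      # same lr, closer to 0
print(too_low, reasonable, too_high, plain, boosted)
```

With the same small learning rate, the run with momentum ends nearer the minimum because consecutive gradients point the same way and the steps add up.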

Image Preprocessing

Although we don’t need resizing, PIL applies anti-aliasing as part of a resize operation, so the image is resized (to its own size) in order to get it. You may write arrays into the text box, and each one will be converted into an array in the Python dictionary that drives the training in the background. Converting the images to RGB lets us use RGBA images (red-green-blue-alpha, where alpha represents opacity) by reducing their channel count from 4 to 3. These kinds of operations can make a significant difference in the utility of the dataset, or in the percentage of it that can be used. You may also skip the image operations completely.
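One way the text-box arrays could be turned into Python lists is sketched below. It is an assumption about the mechanism, not the code behind the form: the function name parse_array_field and the field names resize_size and crop are hypothetical, and json.loads is simply one safe way to parse a bracketed list.

```python
import json


def parse_array_field(text):
    """Turn a text-box value such as "[500, 500]" into a Python list.

    Returns None for an empty field so the operation can be skipped.
    """
    text = text.strip()
    if not text:
        return None
    value = json.loads(text)
    if not isinstance(value, list):
        raise ValueError(f"expected a list, got {type(value).__name__}")
    return value


# Hypothetical form values; the key names are illustrative only.
form = {"resize_size": "[500, 500]", "crop": ""}
image_ops = {name: parse_array_field(raw) for name, raw in form.items()}
print(image_ops)
```

Leaving a field empty maps to None here, which matches the idea that image operations can be skipped entirely.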

Transformations

Transformations are the part where you adjust how images are turned into tensors, which is necessary for Machine Learning calculations. So far I have only supported the ToTensor and Normalize functions of the PyTorch library. Just like with image operations, you may use an array in text format for the mean and std of the normalization process. You may also apply transformations to the target in the Machine Learning training process in order to get a better representation.
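The arithmetic behind these two transformations is simple enough to work through for a single pixel. The sketch below mimics it in plain Python: ToTensor scales 8-bit channel values to the range 0 to 1, and Normalize then applies (value − mean) / std per channel. The helper names and the mean and std of 0.5 are chosen for illustration.

```python
def to_tensor_value(pixel):
    """Scale 8-bit channel values (0-255) to floats in [0, 1], as ToTensor does."""
    return [channel / 255.0 for channel in pixel]


def normalize(values, mean, std):
    """Apply (value - mean) / std per channel, as Normalize does."""
    return [(v - m) / s for v, m, s in zip(values, mean, std)]


pixel = (255, 128, 0)                      # one RGB pixel
scaled = to_tensor_value(pixel)
normalized = normalize(scaled, mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
print(scaled, normalized)
```

With a mean and std of 0.5, values that started in the range 0 to 1 end up in the range −1 to 1, centered around zero.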

Label Names

In previous weeks, we implemented a way to automatically gather preprocessed and labelled data with SerpApi’s Google Images Scraper API, using SerpApi’s Python library, Google Search Results in Python. We stored the scraped images in a local Couchbase Server, queried with N1QL, in order to implement future asynchronous processes. N1QL is a query language that brings the power of SQL to JSON documents. We store the images with their label names in the server and fetch them automatically whenever a Machine Learning training or testing process takes place. For now, label names represent the queries made to SerpApi’s Google Images Scraper API, one query per line. In the future we will add automatic gathering of missing queries in the datasets before the training.
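A small sketch of how a one-query-per-line text box might become a list of labels and a label-to-index mapping for the classifier. The function name and the breed names are placeholders for illustration, not the queries actually used in the series.

```python
def parse_label_names(text):
    """Split the label text box into a list of queries, one per line."""
    labels = []
    for line in text.splitlines():
        line = line.strip()
        if line and line not in labels:    # skip blank lines and repeats
            labels.append(line)
    return labels


# Placeholder input: three queries with a stray blank line and a repeat.
raw = "American Hairless Terrier\n\nAlaskan Malamute\nAmerican Eskimo Dog\nAlaskan Malamute\n"
labels = parse_label_names(raw)
label_to_index = {label: index for index, label in enumerate(labels)}
print(labels, label_to_index)
```

The number of distinct labels is what a keyword such as n_labels would stand for when sizing the last layer.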

Batch Size, Epochs, Model Name

The rest of this form, which creates a Python dictionary with our commands, consists of batch size, number of epochs, and model name. Batch size is how many images are fetched for each training step. Number of epochs is how many times the training process repeats over the whole dataset. Model name is the name of the file the trained Machine Learning Model will be saved to. Since we are using the PyTorch library, it will be saved in .pt format.
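How batch size and number of epochs combine can be counted with a short loop. In this sketch a list of ten integers stands in for ten images, and the helper iterate_batches is a hypothetical stand-in for a data loader.

```python
import math


def iterate_batches(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


dataset = list(range(10))      # stand-in for 10 images
batch_size, n_epochs = 4, 3

updates = 0
for epoch in range(n_epochs):
    for batch in iterate_batches(dataset, batch_size):
        updates += 1           # one training step per batch

batches_per_epoch = math.ceil(len(dataset) / batch_size)
print(batches_per_epoch, updates)
```

The last batch of each epoch is smaller when the dataset size is not a multiple of the batch size, which is why the count uses a ceiling.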

Asynchronous Machine Learning

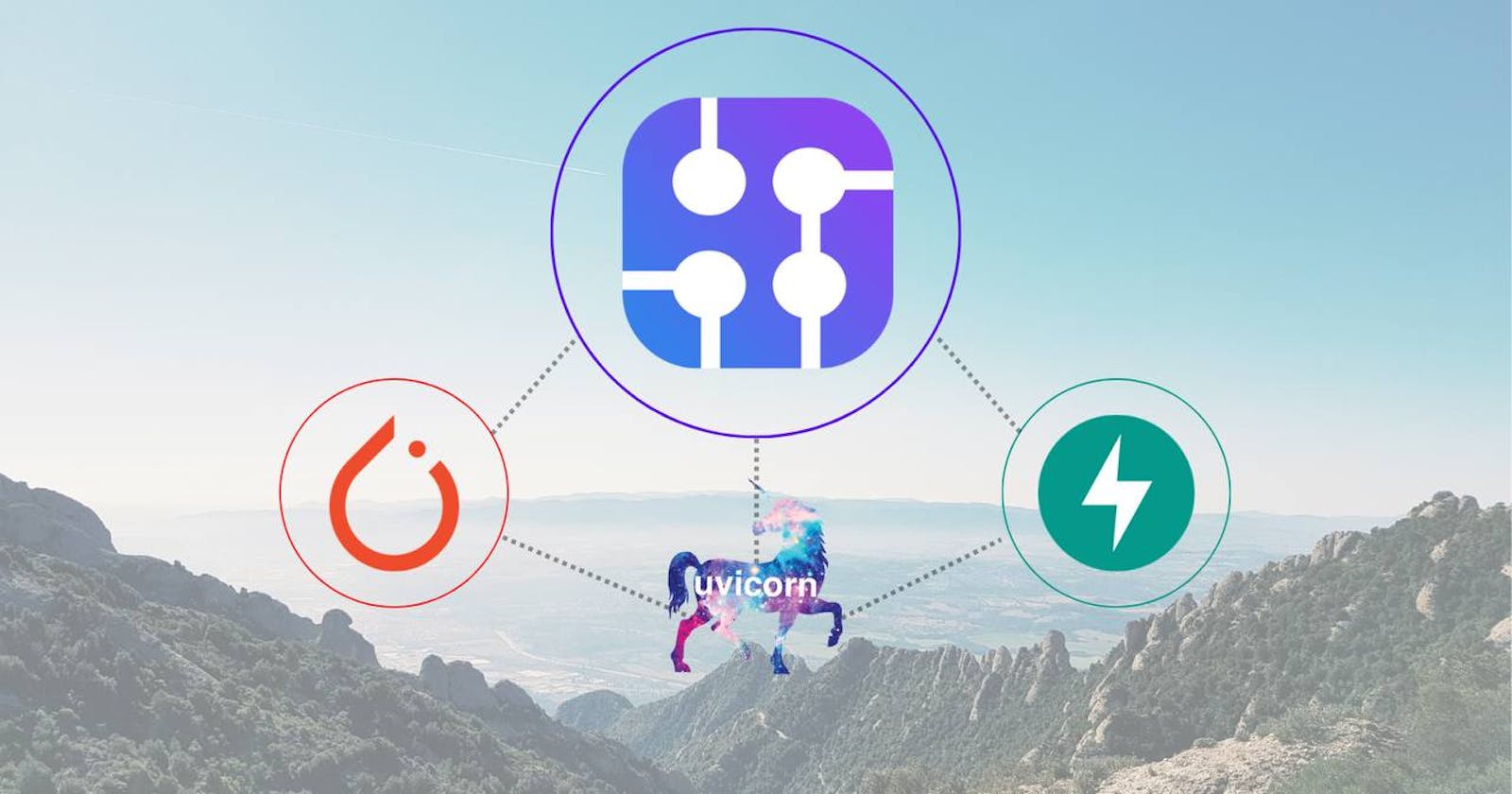

The moment we press the Begin Training button, we send an asynchronous request to the train endpoint with the desired Machine Learning training parameters, and the training takes place in the background of FastAPI. In the meantime, we may create another Deep Learning model. Once the first training is done, it’ll automatically create a model file with the desired name.

This is useful for comparing changes in the parameters of a Machine Learning Model in order to get a grasp of what an optimal Machine Learning training process should be like. In the following weeks we will add a new page with live visualization data from the training process, and cross validation for different training processes on the same graph. These graphs will also include the metrics of the different processes, alongside the training dataset.

I have used FastAPI’s background tasks to achieve this:

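    import torch
    from fastapi import BackgroundTasks

    # app, TrainCommands, Train, CustomModel and the dataset classes
    # are defined elsewhere in the project.

    @app.post("/train/")
    async def train(tc: TrainCommands, background_tasks: BackgroundTasks):
        def background_training(tc):
            if tc.model['name']:
                # Look up a predefined model class by its name.
                # Note: eval runs arbitrary code, so only use it with trusted input.
                model = eval(tc.model['name'])
            else:
                model = CustomModel
            trainer = Train(tc, model, CustomImageDataLoader, CustomImageDataset, ImagesDataBase)
            trainer.train()
            # Drop the reference and release cached GPU memory once training ends.
            model = None
            try:
                torch.cuda.empty_cache()
            except Exception:
                pass

        # Schedule the training to run after the response has been sent.
        background_tasks.add_task(background_training, tc)
        return {"status": "Success"}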
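Because eval executes whatever string reaches the endpoint, one possible alternative for choosing a model by name is an explicit lookup table. This is only a sketch of that idea: PresetModel, MODEL_REGISTRY, and select_model are hypothetical names, and the two classes are empty stand-ins rather than real models.

```python
class CustomModel:
    """Stand-in for the layer-by-layer model built from the form."""


class PresetModel:
    """Stand-in for a previously defined model class."""


# Only classes listed here can be requested by name.
MODEL_REGISTRY = {"PresetModel": PresetModel}


def select_model(name):
    """Return the registered class for `name`, or CustomModel if name is empty."""
    if not name:
        return CustomModel
    try:
        return MODEL_REGISTRY[name]
    except KeyError:
        raise ValueError(f"unknown model name: {name!r}") from None


print(select_model("PresetModel").__name__, select_model("").__name__)
```

An unknown name fails with a clear error instead of being evaluated, at the cost of having to register each model class once.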

I will not be sharing the detailed code of the actual page or how it’s made. The reason is simple: I didn’t use React and went with a hard-coded approach, so I don’t think it would constitute a good example here. It’ll be public after I release the open source library.

Conclusion

I am grateful to the reader for their attention, to the Brilliant People of SerpApi for making this writing possible, and to the author of the codepen I repurposed for this week’s showcase. I’d also be grateful if the reader would follow the series on whichever platform they found it (LinkedIn, Medium, Tealfeed, etc.). In the following weeks we will discuss visualization of the training and testing processes, storing Machine Learning training data in the storage server, the use of Unsupervised Learning and Reinforcement Learning, a deeper look at Supervised Learning models, and the ability to use Kaggle Datasets.